Putting the AI in compliance: ChatGPT and FTC warnings

Developments in artificial intelligence have never been more prominent, but what are the compliance implications for fast-evolving AI?

Written by a human

There was a time, not so long ago, when artificial intelligence (AI) was met with skepticism and distrust within financial services. Slowly, slowly, a general acceptance has emerged in which firms understand that AI can benefit and streamline operations – and that it might not be geared to taking jobs, but instead to assisting staff with existing tasks.

This gradual growth in trust and understanding of AI has led to a number of developments. Firstly, vendors are increasingly developing, using, and promoting their use of AI within their products. Secondly, AI development has accelerated – as more people use it, demand grows, and more advanced AI is produced. In particular, this can be seen in the recent “hype” around ChatGPT, which is swiftly being barred from use by the compliance teams of financial institutions.

What is ChatGPT?

ChatGPT is first and foremost an AI chatbot. It is a Large Language Model (LLM), developed by OpenAI, which answers users’ questions with what it infers to be the correct answer.

ChatGPT gleans context from a conversation to deliver more relevant results. So, the more an individual interacts with it within a given conversation, the more exact the results tend to be. As well as answering simple queries, ChatGPT has been shown to be advanced enough to pass exams, write articles, and play chess.

While ChatGPT is advanced, the data used to train it cut off in 2021, which means it is unaware of anything that happened after that point. It is also unable to verify whether information is factual and cannot provide references for its answers, meaning its answers are sometimes inaccurate or incorrect.

Why are banks banning the use of ChatGPT?

It is often said that ignorance is bliss. However, where compliance is concerned, ignorance is risk.

The industry response to ChatGPT appears to have been incredibly swift, given that the new technology was introduced late in November 2022. However, realistically, this may not be due to any perceived danger, but more a result of business-as-usual technology controls within financial institutions.

Most banks will have in place policies and processes for the management of new technology. Some policies will likely restrict the use of new technology until a risk assessment has been completed, usually by the IT team. Only then will a firm decide whether or not the technology offers benefits to the firm. Indeed, it was reported by Retail Banker International that JP Morgan’s move to restrict employees from using ChatGPT was not triggered by any incident, but was a result of existing controls around third-party software.

Of course, away from simple policy rationale, there is worry among some about the potential uses for ChatGPT within firms. At a recent Hedge Fund Roundtable hosted by Global Relay, some attendees expressed concern about quantitative analysts, or ‘quants’, using the new technology to contribute to the research behind their models. There are questions about how far the technology could be used to assist or enhance a person’s day job, too. This is of particular concern given that the information it provides often cannot be verified.

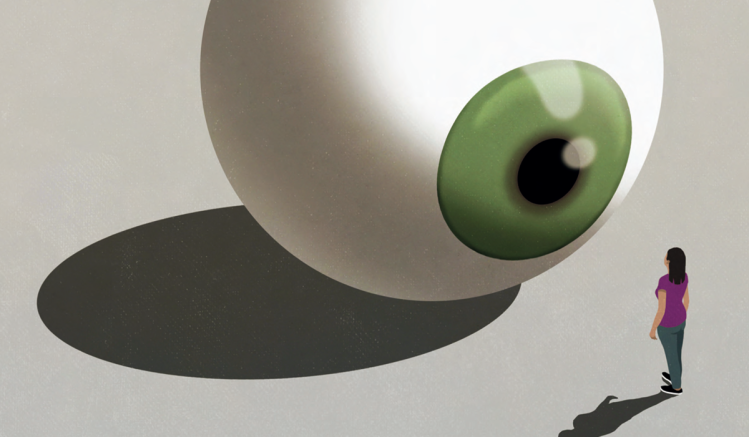

The real issue with ChatGPT seems to exist in its potential uses, rather than what it has been used for so far. Currently, ChatGPT enables individuals to have unsupervised interactions with AI or, as Responsible Innovation expert Chris Leong describes it, with “non-deterministic algorithmic technologies” – leading to potentially disastrous results.

This is especially true given the pace of evolution. The risk lies in the fact that the internal mechanics that allow the technology to operate are not clear, or not known, and nor is how it may operate in the future. This opens the floor to compliance issues, ranging from data privacy through to the dissemination of inaccurate investment information. There may also be issues around compliant communications, though these are yet to be fully explored.

Do not lie about your AI

On the topic of risk, in the same week that it was reported that banks were banning ChatGPT, the U.S. Federal Trade Commission (FTC) asked businesses to “keep your AI claims in check”.

The FTC has acknowledged that there is currently an “AI hype” and that “some advertisers won’t be able to stop themselves from overusing and abusing” terms such as ‘AI’. For the FTC, the concern is that “some products with AI claims might not even work as advertised in the first place”, insofar as they may not use AI at all, or their makers may be overstating the capabilities of AI-powered products.

The FTC has said that, where firms promote artificial intelligence within their marketing efforts, it will be asking a number of questions, including:

– Are you exaggerating what your AI product can do?

– Are you promising that your AI product does something better than a non-AI product?

– Are you aware of the risks?

– Does the product actually use AI at all?

The FTC wants to see that businesses are able to fully substantiate the claims they make about their use of AI. Where a claim cannot be backed up, such as a claim that AI performs better than non-AI processes, its advice is blunt: “if proof is impossible to get”, then “don’t make the claim”. The FTC also notes that its technologists are able to look under the hood of technology to see whether its internal workings match any claims.

Ultimately, the FTC wants to make clear that the use of AI in business is important, and that overpromising what it can deliver is irresponsible. It concludes, somewhat ominously, that “you don’t need a machine to predict what the FTC might do” where businesses make unsupported claims about AI.

Putting the AI in compliance

AI, as with any developing technology, poses as much risk as it does reward. The challenge for both regulators and regulated industries alike is understanding where these exist, how to manage them, and how to keep up with their evolution.

Global Relay’s data Connectors allow you to monitor and archive communications across a variety of channels, and support your firm as it strives for venue completeness. Our robust Connector for ChatGPT Enterprise will help you solidify your compliance strategy and consolidate any and all of your critical communications.