“What should I have for lunch?” might feel like an easy question to answer. But the likelihood is that, when asking yourself that question, you didn’t immediately come to “sandwich” as your final answer without taking several other steps: “what did I have yesterday?”, “what ingredients do I have in the kitchen?”, “how much time do I have to make something?”, and perhaps even “does two doughnuts count as lunch?”

Chain of Thought (CoT) reasoning sees Large Language Models (LLMs) take a similar approach to answering questions while being able to “show their working” and give users a step-by-step breakdown of the steps taken by the LLM to arrive at a particular decision. This increased transparency can not only enable users to check that an LLM has reached the correct decision more easily, it also empowers those working in regulated industries with a greater degree of explainability, and allows models to tackle more complex tasks like communications monitoring.

How does Chain of Thought prompting work?

CoT works to enhance the outputs of LLMs, particularly for tasks involving multi-step reasoning. CoT breaks down complex problems into manageable intermediate steps that sequentially lead to a conclusive answer, presenting the clear, logical steps it has taken to the user.

A simple example of how a CoT process differs from a “single step” LLM prompt exchange would be:

Traditional “single step” LLM prompt

Prompt: What colour is the sky?

Answer: The sky is blue.

Chain of Thought prompt

Prompt: What colour is the sky – explain your answer step by step.

Answer: Earth’s atmosphere constitutes air molecules / these molecules scatter shorter-wavelength light more than longer wavelengths / when sunlight hits the atmosphere, this scattering means blue light more visible to the human eye / this makes the sky appear blue, a primary colour

There are several different approaches to CoT prompting, which include:

- Zero-shot: Leverages the inherent knowledge within a model to conduct step-by-step reasoning without providing examples

- K–shot: Provides K domain expert example reasoning paths for the LLM to utilize when generating its own reasoning paths

- Automatic: Generates effective intermediate reasoning steps automatically, automating the generation and selection of reasoning paths

- Multimodal: Incorporates a wide range of inputs, including text and images, enabling the model to process more diverse types of information

What are the benefits of Chain of Thought prompting?

Chain of Thought reasoning offers firms a range of benefits, which include:

- Enhancing LLM performance, especially for more complex reasoning tasks, offering improved accuracy and greater transparency

- Giving users clearer oversight of intermediate reasoning steps allows them to more easily understand the decision-making process the LLM has employed

- By breaking down a problem into component parts, CoT approaches can lead to more accurate and reliable answers, particularly where tasks require multi-step reasoning, and greater alignment with ethical standards

While offering multiple clear benefits, CoT approaches are not entirely without additional considerations. They require that users input “higher quality” prompts to guide models accurately, which may necessitate additional prompt engineer training. Generating and processing prompts requiring multiple reasoning steps naturally requires more compute power and time compared to single step prompting, which can make implementation more costly for organizations looking to establish their own CoT LLMs, making identifying a partner with a CoT-capable LLM already in use a much more cost-effective solution.

Chain of Thought prompting for compliance

Regulators like the Securities and Exchange Commission (SEC) and Financial Conduct Authority (FCA) require financial services firms take a “risk based” approach to monitoring business communications to identify potential signs of market abuse or other misconduct. This is vital for protecting market integrity, firms’ reputations, and to identify and mitigate risks before regulators take enforcement action, as well as maintaining high levels of explainability about how AI is used.

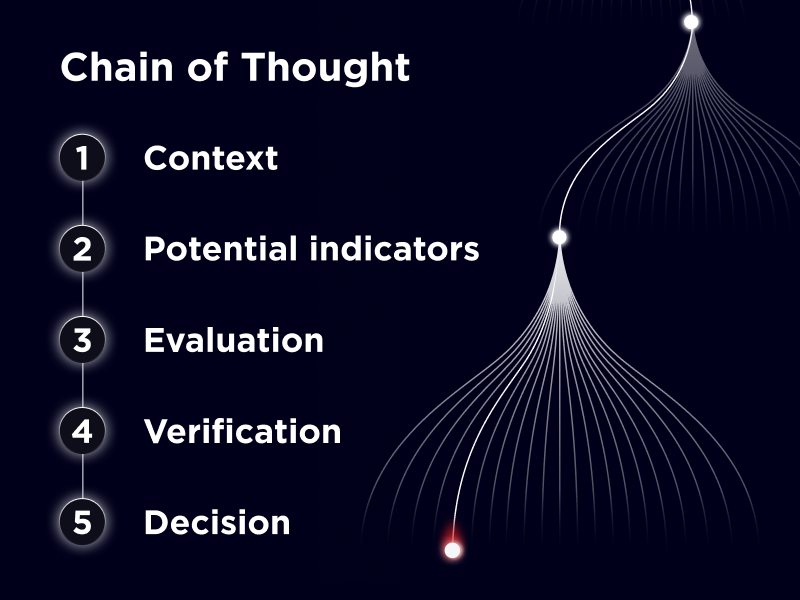

Global Relay’s LLM does not require any additional user training to leverage CoT reasoning for communications compliance. Working as part of our AI-enabled communications monitoring solution, a step-by-step CoT reasoning output may look like:

- Context: The model will summarize the message in question while considering the surrounding conversation and context

- Potential Indicators: The next step identifies any potential indicators of misconduct or regulatory concern

- Evaluation: Potential meanings are interpreted and the model aligns these with known patterns of potential risk

- Verification: The model then verifies whether there is concrete evidence supporting a possible match, such as explicit language

- Decision: A decision is reached based on the above steps, with the model outputting a risk classification and a breakdown of the steps taken to reach this decision

To refer to our earlier example, a single-step prompt of “does this communication contain signs of misconduct” or “is this communication non-compliant” may result in the simplistic answer of “no” – missing vital context clues hidden in the complexity and ambiguity of real-life communications.

By providing reviewers with clear step-by-step reasoning, CoT approaches offer time and resource-poor compliance teams with benefits including increased accuracy, enhanced explainability, and reduced false positives – although perhaps not a solution to the question of what they should have for lunch.

To learn more about how our Large Language Model leverages contextual, step-by-step Chain of Thought to facilitate more accurate, transparent risk identfication watch our dedicated video breakdown.